What if you could bring the power of AI into SQL Server without sending a single byte of data to the cloud? That is exactly what Ollama helps you achieve. In this guide, you will learn how to run advanced models such as Llama 3, Mistral, Phi-3, and Gemma locally on Windows and connect them with SQL Server through MCP to get smart, natural-language insights while keeping all your data completely private. Let us learn about Local AI Models for SQL Server – A Complete Guide.

The Client’s Requirement

A few months ago, one of my clients came to me with an interesting challenge. They wanted to use AI to summarize large reports, answer questions, and assist their analysts. However, their primary concern was data security. Their organization strictly prohibited sending client data to any external AI service, such as ChatGPT or other hosted large language models. They needed a system that could run securely within their own environment.

The goal was clear: build an AI solution that provides intelligent responses but never lets data leave the company’s firewall. This is where Ollama became the perfect choice. Ollama allows running advanced AI models like Llama 3, Mistral, Phi-3, and Gemma either locally (offline) or through cloud resources (online). It gives complete control over privacy, performance, and deployment style.

Why Choose Ollama

Ollama offers flexibility for both developers and enterprises. It can be installed on Windows, Linux, or macOS and supports local as well as cloud-based inference.

With Ollama, you can:

- Run models entirely offline so no information ever leaves your system.

- Use powerful cloud-hosted models when you need extra computing power.

- Integrate with your existing databases or applications using its local API.

For teams working with confidential data such as financial records, medical files, or internal reports, Ollama ensures that data stays under your control.

This fits perfectly with the architecture discussed in my article on SQL Server and AI – Setting Up an MCP Server for Natural Language Tuning, where models can interact safely with SQL Server using natural-language queries.

Supported Models for Local and Cloud Setup

Ollama supports many models that you can run either locally or in the cloud.

Local Models (Offline)

Local models are downloaded and executed directly on your computer. Once installed, they do not need an internet connection.

Examples include:

- Llama 3 – General-purpose reasoning model that runs smoothly on mid-range GPUs.

- Phi-3 Mini – Compact and efficient, suitable for systems with limited memory.

- Gemma 2B – Lightweight and fast model designed for CPUs and smaller devices.

These models are perfect for clients who require total privacy and offline access.

Cloud or Hybrid Models

Cloud models are useful when you want to use larger, more powerful models or distribute workloads across machines. Ollama can connect securely to cloud infrastructure that you control.

Examples include:

- Mistral 7B and Mixtral 8x7B – Excellent for complex reasoning, creative writing, and summarization.

- Llama 3 70B – Enterprise-scale model designed for cloud environments.

A hybrid setup allows you to run smaller models locally and call heavier models from the cloud when necessary.

System Requirements

To install Ollama on Windows, make sure your computer meets these basic requirements:

- Operating System: Windows 10 or Windows 11

- Processor: Intel or AMD or Nvidia CPU (a GPU helps for faster processing)

- Memory: Minimum 8 GB RAM, recommended 16 GB or higher

- Storage: At least 10 GB of free space for model files

- Internet Connection: Required only for the first download of models

Once the model is installed, it can run completely offline.

Step-by-Step Guide to Installing Ollama on Windows

Step 1: Download Ollama

Go to the official download page: https://ollama.com/download/windows.

Click on Download for Windows to get the installer file named OllamaSetup.exe.

Step 2: Install Ollama

Run the OllamaSetup.exe file. Follow the installation prompts on the screen. By default, it installs in your user directory, so you don’t need administrator access. After installation, Ollama might show an icon in your system tray or Start Menu.

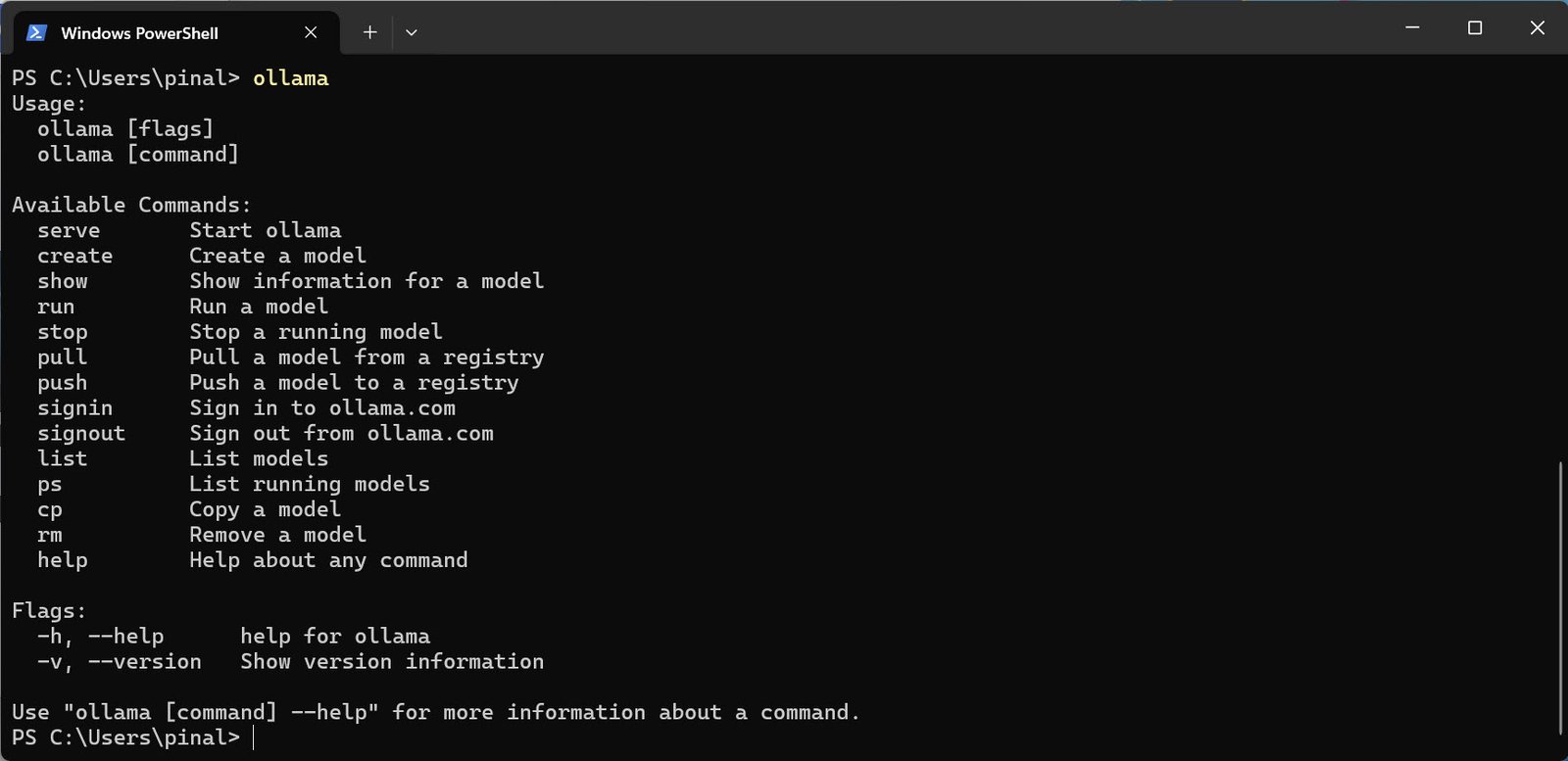

Step 3: Verify Installation

Open Command Prompt or PowerShell and type:

ollama

You should see the available commands such as run, pull, and list. If it says “command not recognized,” check your PATH settings or restart your system.

Step 4: Download Your First Model

You can now download a model for local testing. For example, run:

ollama pull llama3

This downloads the Llama 3 model.

You can also try:

ollama pull mistral ollama pull phi3 ollama pull gemma

After the model is downloaded, start it using:

ollama run llama3

You will see a prompt where you can type questions or commands, and the model will reply instantly using your local resources.

Step 5: Manage Models

You can manage your installed models with these commands:

ollama list # shows installed models ollama rm llama3 # removes a specific model ollama pull mistral # updates or adds another model

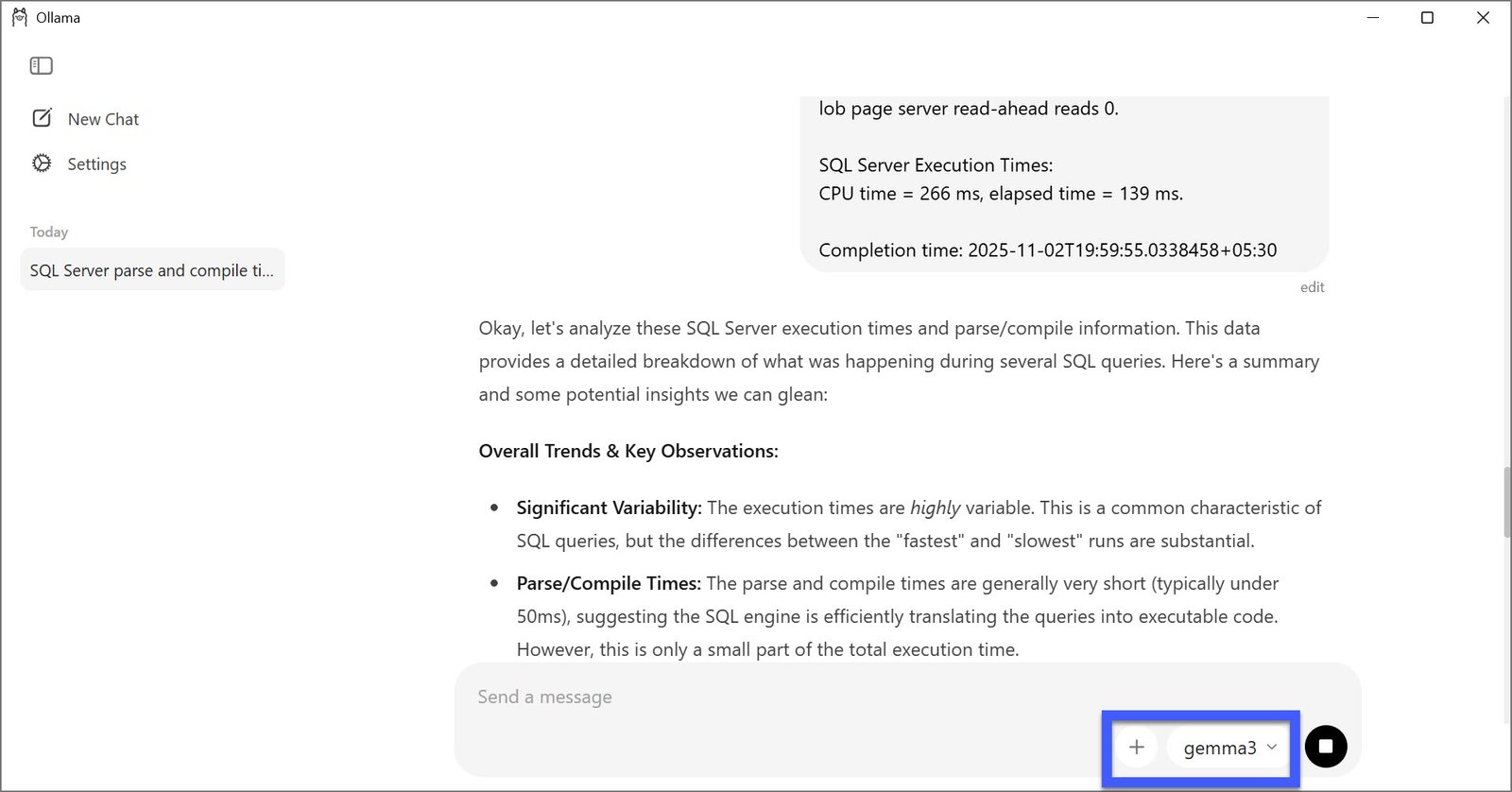

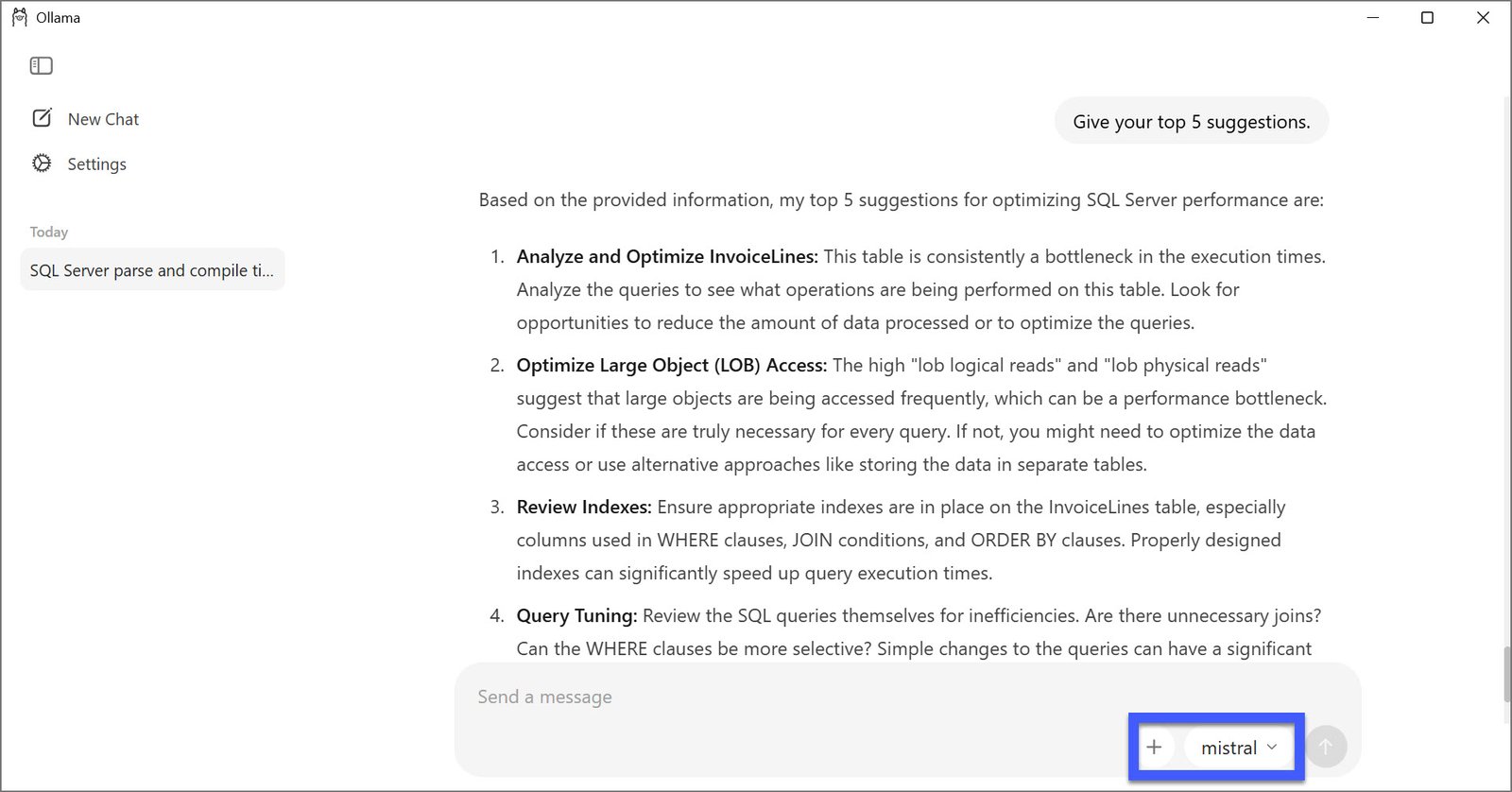

Using Ollama Through GUI or API

You can use Ollama in three different ways:

- Command Line: Run models directly using PowerShell or Terminal.

- Desktop App (GUI): A user-friendly interface where you can choose models, type prompts, and view outputs easily.

- Local API: Ollama runs a small server at

http://localhost:11434, which can be used by developers to integrate it with applications, dashboards, or chat interfaces.

Working Offline vs Cloud Mode

Local or Offline Mode

- All data processing happens on your computer.

- No internet access is required after the initial setup.

- Data stays within your network.

- Best choice for secure or air-gapped systems.

Smaller models like Phi-3 or Gemma 2B are ideal for offline usage since they run efficiently even on modest hardware.

Cloud or Hybrid Mode

- Useful when your local machine cannot handle large models.

- Ollama can connect to a secured cloud server where big models like Llama 3 70B or Mixtral 8x7B are deployed.

- Data can be encrypted in transit to ensure security.

- A hybrid approach can balance cost, speed, and privacy by using both local and cloud resources.

Integration with SQL Server and MCP

If you are exploring ways to make SQL Server more intelligent, my detailed guide on SQL Server and AI – Setting Up an MCP Server for Natural Language Tuning explains how to combine the power of AI models with database automation. The article walks through how the Model Context Protocol (MCP) acts as a secure bridge between language models and SQL Server, allowing natural-language queries without ever exposing confidential data. Instead of sending your questions to a public API, the model runs within your trusted environment, interprets the user’s request, and uses well-defined tools inside MCP to fetch results safely from SQL Server.

This setup transforms how teams interact with databases. You can ask plain questions such as “Show me the databases with the highest WRITELOG waits in the last hour,” and the local model, through MCP, uses approved commands to get the data. The language model (for example, Llama 3 or Phi-3) never connects directly to SQL Server. It simply understands the intent, triggers the right internal procedure, and presents an easy-to-read summary of the findings. This combination of Ollama, MCP, and SQL Server gives you a secure, private, and intelligent way to use AI for performance tuning and insights within your own network.

Best Practices for a Smooth Setup

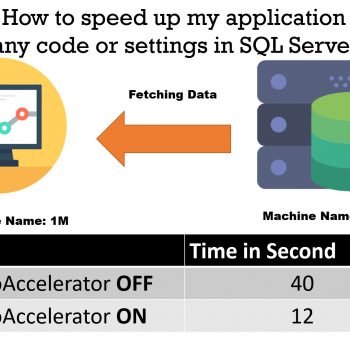

- Use an SSD or NVMe drive to store your models for faster load times.

- Set the

OLLAMA_MODELSenvironment variable if you want to change the default model directory. - Keep your GPU drivers updated for better inference speed.

- Monitor system memory usage while running large models.

- Always test your prompts and results before using the system in production.

- Keep regular backups of your model files.

- Use smaller models for general tasks and reserve large ones for detailed workloads.

- Ensure your system has the latest Windows updates and security patches.

Conclusion

Ollama on Windows provides a secure and flexible way to use AI without depending on external services. It respects your client’s privacy by running models fully offline, yet still supports cloud or hybrid setups when you need more computing power.

You can choose from models like Llama 3, Mistral, Phi-3, and Gemma based on your hardware and project size. When integrated with SQL Server using MCP, it allows natural-language access to databases while keeping every piece of data safe inside your environment.

Connect with me on Twitter.

Reference: Pinal Dave (https://blog.sqlauthority.com)

8 Comments. Leave new

Hi Dave,

Can you please provide some tsql code using ollama assuming AdventureWorksDW database for example which kind of questions you can ask (I found hard to find any tsql example)

I followed your instruction now what?

Thanks,

Oded Dror

Thanks for the post, however, you didn’t explain how to connect Ollama to the MCP Server and your other article “SQL Server and AI – Setting Up an MCP Server for Natural Language Tuning” doesn’t mention Ollama, but how to connect to the MCP-Server using another LLM. Would you go over how you connected Ollama to the MCP-Server?

That can be totally done by Python coding. I have already have demo for running at client but it is not possible to blog it as it is more like a 30 minutes video course.

Is that demo posted anywhere? Thanks!

In Dec I plan to create vide about it.

Hi,

How can I use local SQL server 2014(Express) + AI for my application, where Client might not have powerful PC configuration. It should be light weight. My application is ERP. where user can ask Question or model should alert user like on Dues, Inventory levels etc. Thanks

Hi

As Oded Dror says, what’s next ?

By reading the title of your post, I said to myself : Finally someone that explains how to query SQL using natural language … like “Hi, please give me a summary of sales of “product1″ for 2025 compared to 2024. Also sumarize by bla bla bla …”

Then AI would generate the query, return the query from an API and me the programmer with receive the query , run it and present the resulting dataset the way I choose.

But in order to do this, we must tell AI the data model (joins between tables and the purpose of each table”) I do not intend AI to connect to Sql and detec automatically the data model because I know of any ERP system having their model defined in Sql. THeir mopdel is defined in documentation not in Sql. This is the obscure information I have been seeking.

This is fascinating. Understanding the data being presented in SQL may make sense for a data analyst or data scientist, but being able to convert that information into more impactful business decisions was made far easier. When would it be most secure online? When would it be most useful to have it online? How big a model would make it smartest to convert it to the cloud? I want to deploy this with my own SQL project. This would make it even easier to visualize before converting it to Tableau.