Data Quality Services is a very important concept of SQL Server. I have recently started to explore the same and I am really learning some good concepts. Here are two very important blog posts which one should go over before continuing this blog post about Data Quality Services.

- Installing Data Quality Services (DQS) on SQL Server 2012

- Connecting Error to Data Quality Services (DQS) on SQL Server 2012

This article is introduction to Data Quality Services for beginners. We will be using an Excel file

Click on the image to enlarge the it.

In the first article we learned to install DQS. In this article we will see how we can learn about building Knowledge Base and using it to help us identify the quality of the data as well help correct the bad quality of the data.

Here are the two very important steps we will be learning in this tutorial.

- Building a New Knowledge Base

- Creating a New Data Quality Project

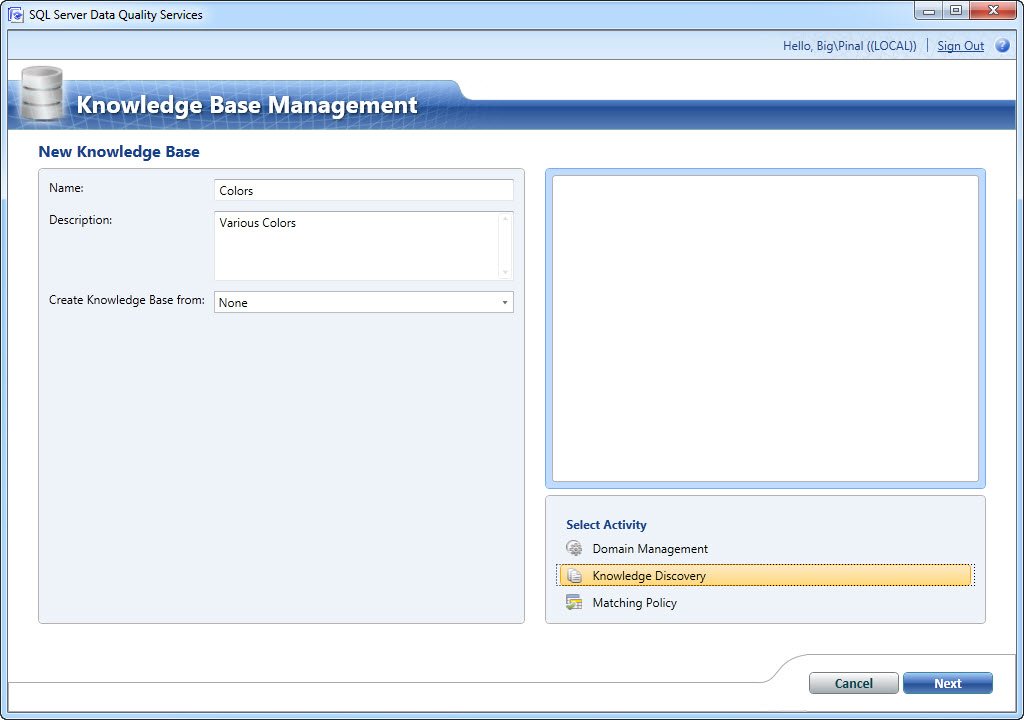

Let us start the building the Knowledge Base. Click on New Knowledge Base.

In our project we will be using the Excel as a knowledge base. Here is the Excel which we will be using. There are two columns. One is Colors and another is Shade. They are independent columns and not related to each other. The point which I am trying to show is that in Column A there are unique data and in Column B there are duplicate records.

Clicking on New Knowledge Base will bring up the following screen. Enter the name of the new knowledge base.

Clicking NEXT will bring up following screen where it will allow to select the EXCE file and it will also let users select the source column. I have selected Colors and Shade both as a source column.

Creating a domain is very important. Here you can create a unique domain or domain which is compositely build from Colors and Shade.

As this is the first example, I will create unique domain – for Colors I will create domain Colors and for Shade I will create domain Shade.

Here is the screen which will demonstrate how the screen will look after creating domains.

Clicking NEXT it will bring you to following screen where you can do the data discovery. Clicking on the START will start the processing of the source data provided.

Pre-processed data will show various information related to the source data. In our case it shows that Colors column have unique data whereas Shade have non-unique data and unique data rows are only two.

In the next screen you can actually add more rows as well see the frequency of the data as the values are listed unique.

Clicking next will publish the knowledge base which is just created.

Now the knowledge base is created. We will try to take any random data and attempt to do DQS implementation over it. I am using another excel sheet here for simplicity purpose. In reality you can easily use SQL Server table for the same.

Click on New Data Quality Project to see start DQS Project.

In the next screen it will ask which knowledge base to use. We will be using our Colors knowledge base which we have recently created.

In the Colors knowledge base we had two columns – 1) Colors and 2) Shade. In our case we will be using both of the mappings here. User can select one or multiple column mapping over here.

Now the most important phase of the complete project. Click on Start and it will make the cleaning process and shows various results.

In our case there were two columns to be processed and it completed the task with necessary information. It demonstrated that in Colors columns it has not corrected any value by itself but in Shade value there is a suggestion it has. We can train the DQS to correct values but let us keep that subject for future blog posts.

Now click next and keep the domain Colors selected left side. It will demonstrate that there are two incorrect columns which it needs to be corrected. Here is the place where once corrected value will be auto-corrected in future.

I manually corrected the value here and clicked on Approve radio buttons. As soon as I click on Approve buttons the rows will be disappeared from this tab and will move to Corrected Tab. If I had rejected tab it would have moved the rows to Invalid tab as well.

In this screen you can see how the corrected 2 rows are demonstrated. You can click on Correct tab and see previously validated 6 rows which passed the DQS process.

Now let us click on the Shade domain on the left side of the screen. This domain shows very interesting details as there DQS system guessed the correct answer as Dark with the confidence level of 77%. It is quite a high confidence level and manual observation also demonstrate that Dark is the correct answer. I clicked on Approve and the row moved to corrected tab.

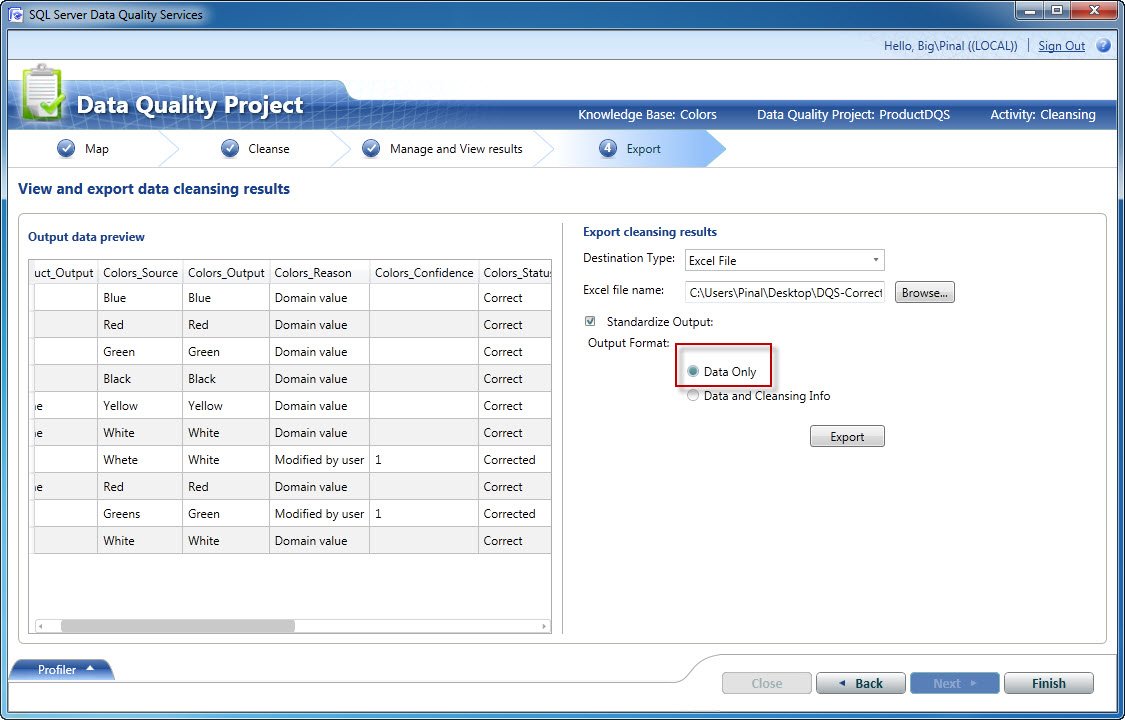

On the next screen DQS shows the summary of all the activities. It also demonstrates how the correction of the quality of the data was performed. The user can explore their data to a SQL Server Table, CSV file or Excel.

The user also has an option to either explore data and all the associated cleansing info or data only. I will select Data only for demonstration purpose.

Clicking explore will generate the files.

Let us open the generated file. It will look as following and it looks pretty complete and corrected.

Well, we have successfully completed DQS Process. The process is indeed very easy. I suggest you try this out yourself and you will find it very easy to learn. In future we will go over advanced concepts.

Are you using this feature on your production server? If yes, would you please leave a comment with your environment and business need. It will be indeed interesting to see where it is implemented.

Reference: Pinal Dave (https://blog.sqlauthority.com)

26 Comments. Leave new

Thanks a lot for this valuable information. Here is also some more information about DQS in SQL 2012.

Thanks

Manish

Thanks for sharing. Really useful information.

Nice information,

But what are the practical use of it in production servers.

Joining the question above – I’d love to hear some ‘real-world’ examples of DQS usage.

Also, is it possible to use as an automatic process, or does it always have to be ad-hoc?

A very useful introduction to DQS. Do you find that the lines between MDS and DQS have blurred and have you written on this subject at all?

Very nicely introduced DQS, we are planning to implement this in production, we are a health care company and with recent health law changes we need to have an integrated view of our patients across multiple patient applications service different lines of business. In this scenario the biggest challenge is how do you match your patients across different applications and come up with one version of the patient when no two systems have the same unique identifier. To solve this problem we are planning on using DQS and train it with the rules at the end of which we are hoping to uniquely identify the patient. Do not know if there are any other better options out there if anyone knows please do comment. Option of trickling the cleansed data back to the source is not acceptable and cannot be enforced, not all source systems have SSN or Medicaid or Medicare numbers….

Philips,

I understand your need for uniquely identifying individuals, but don’t quite see how this solution helps you toward your goal. While I understand how it may standardizing things like city and state names, I’m not sure how it would help to define uniqueness between patients.

Thanks for the sharing this nice introduction to DQS.

Even when this kind of projects can reach an automatic execution, they will always need the human factor to decide which values are real and which are not.

That is why it is nice to see a tool that can really be used by power users, who will be doing this kind of stuff.

Thanks for sharing DQS knowledge.

Good to know about the new features ,but it seems a bit impractical since in database we have a list of valid entries for a column and users have to opt a valid value for a column from a combo box which is actually connected to a look up table ,so this kind of error will never happen.I would like to hear about useful use of this feature.

Nice introduction post indeed. However my question will be on the difference between DQS and MDS (Master Data Services). My other question is on the issue whereby you do not actually need to correct the source data but all you will need to do will be just map the incorrectly spelt data to the correct ones. For example if you have Jo, Joe, Johhny in the source and require all these three to just be mapped to the correct Master Data value John. Will it be possible to represent such a scenario using DQS or MDS?

Thank You Pinal. As always you bring new features to light with such simplicity and ease. Makes it more interesting to learn.

Thanks, Pinal,

Clear. Simple. Appreciated.

Have you used it with hindi language(nvarchar) with really large table of say 20+ lakh record for matching?? I am finding it difficult to prepare a correct knowledge-base. Any help would be appreciated

Thanks for sharing. Straight forward & clear. So appreciated.

Thanks Pinal for sharing info with us

Its realy nice feature for data cleansing.

Can we automate this process like auto creating knowledge base ?

Hi Pinal,

Your blog is very description and on the topic. I am a big fan of your blog. Thanks very much.

I am glad you liked it.

Hi Pinal,

I have been using your blog from very long time, and I must say your articles are very helpful.

I like you this artilce as well :) I have a question for you.

Can I use this Data Quality Service for between two tables.

I mean I want to map similar columns between two tables in to one table.

Thanks.

Pinal,

Thanks you for the post. It would also be good to have answers to interesting questions that have already been addressed above. Below I pointed out to the name, date and time the question was posted in this blog:

– yousef: October 25, 2013 12:32 pm

– David: November 6, 2013 6:58 pm.

– Gaurav Pareek: May 26, 2014 6:45 am

– Sudhir Pawale: February 4, 2015 12:24 pm

– Syed Asad Ahmed: April 8, 2016 8:06 pm

Good thing, worked like a charm (just like I did it two years ago … :)) … But what I missed at that time as well: after doing the actual corrections, I would have expected, that this knowledge is saved and will not be asked again, if I re-run the process. Am I wrong?

I think it should.

can you plz tell about some thing Master data services in sql server 2016 ?