Using SQL Server functions in the WHERE clause of your queries can significantly impact performance. Let us explore that in this blog post.

SQL SERVER – Performance: OR vs IN – A Summary and Further Reading

The varied responses led me to write a detailed blog post to clarify the correct answer. If you missed it, you can read it here. Let us see A Summary and Further Reading.

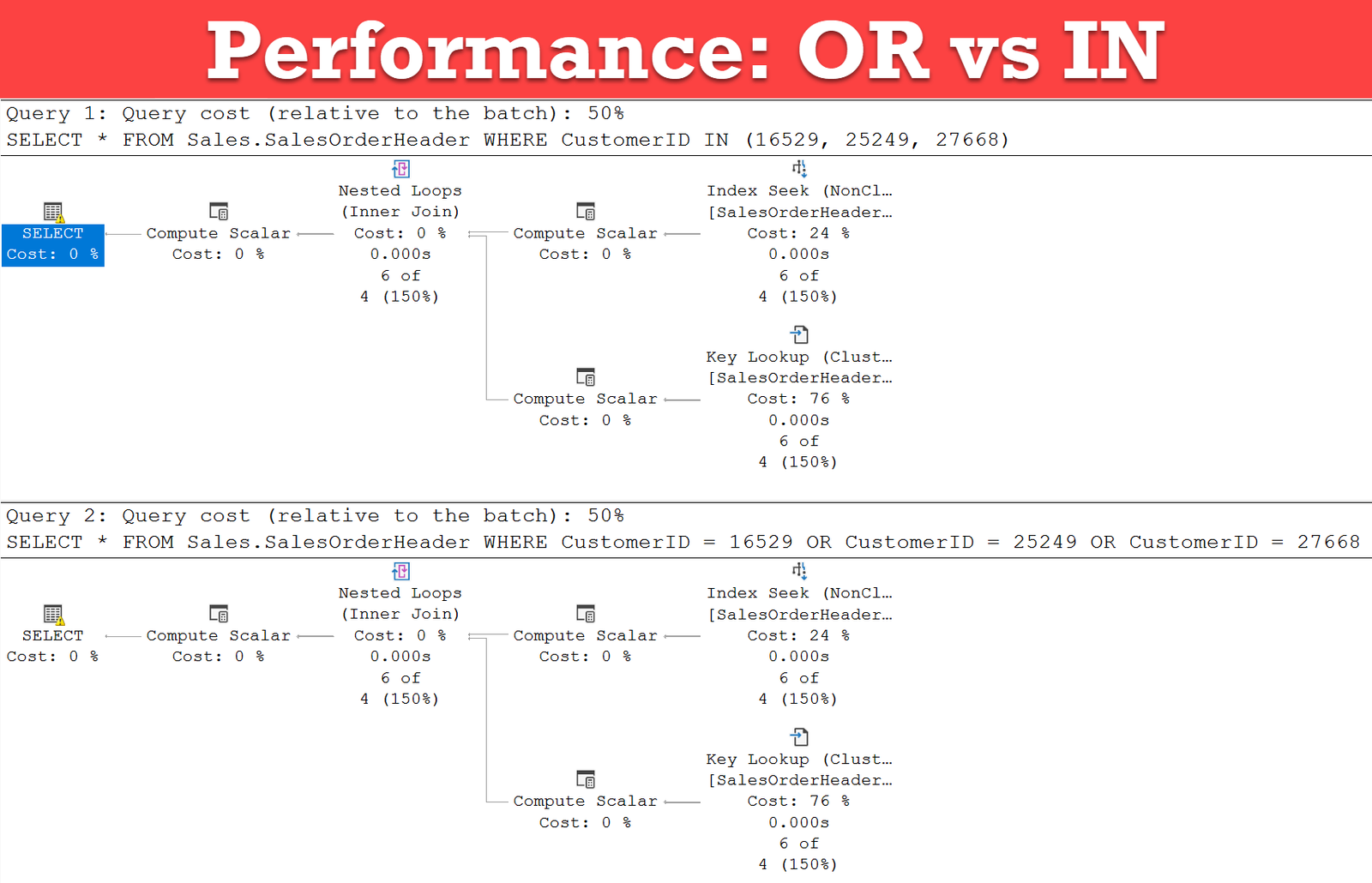

SQL SERVER – Performance: OR vs IN

I recently asked on Twitter an exciting question about OR vs IN. While I got lots of good answers, there were an equal amount of wrong answers.

SQL SERVER – The Comprehensive Guide to STATISTICS_NORECOMPUTE

One such feature that plays a pivotal role in managing your SQL Server databases’ performance is the STATISTICS_NORECOMPUTE option.

SQL SERVER – Understanding MAX_DURATION in Index Creation

One particular option that can be specified during the index creation is MAX_DURATION. This blog post will explore this option.

MySQL’s Index Condition Pushdown (ICP) Optimization

MySQL enhances its performance through an optimization technique called Index Condition Pushdown (ICP). Let’s delve into the depths of ICP.

SQL SERVER – Making Recursive Parent-Child Queries Efficient

One puzzle is finding out how many employees each manager is responsible for in a company. Let us Make Recursive Parent-Child Queries Efficient.