Today, I’d like to examine how in-memory OLTP engines to vastly improve performance and scalability of SQL Server databases and to accelerate applications. A very large part of this article focuses specifically on SQL Server 2014 and its new In-Memory OLTP capabilities.

So, let’s dive in.

Achieving fast application response times, high performance and database scalability is critical. Typically, we may have used two approaches looking for performance gains:

- Basic application and database read-write code tuning and redesign

- Deploying high-spec hardware servers and storage

Both techniques have limitations. Code re-writes and database redesign cban be lengthy processes, and can introduce bugs into otherwise stable systems. Bigger hardware and specialized storage technologies can be expensive. And upgrading to larger hardware can also cause increased software licensing costs.

In-Memory to the rescue

In-memory solutions are becoming increasingly popular and software development professionals have started to embed different kinds of In-Memory solutions. Caching prevents the cached queries from hitting the database at all, thus eliminating the locks, blocks and spinlocks and drastically reducing CPU, Memory and I/O load. The benefit is that storing, accessing and maintaining data IN-MEMORY (instead of on disks) greatly improves application performance and helps Dev and IT teams meet SLA goals.

There are a few different flavors of in-memory technology. Let’s take a look.

Data-Grids (IMDG) and Distributed Caching Solutions

For developers building new applications, a popular approach is to use API/code based Data-Grids (IMDG) and Distributed Caching solutions. Such solutions enable complex parallel processing, event processing and generally help to build highly scalable, high performance applications (such as large social networks’ websites and real-time stock-trading platforms). But major code development effort is required. Developers are required to not only put (and get) data into In-Memory Cache but also to mimic database transactional logic. Maintaining this code can get complicated.

For most production databases and applications such major code changes are not a feasible option.

In-Memory Dynamic Caching

In-memory dynamic caching is another approach that is very well suited to accelerating live in-production applications and databases. In-memory dynamic caching does not require additional code in applications, or modifications to databases. This key advantage over Data Grids and Distributed Caching solutions (and over the SQL Server 2014 In-Memory OLTP engine, as we will see) makes it much easier technology to deploy. Because there is no requirement to touch app-code, it also means this approach will accelerate 3rd-party applications that use SQL Server, like SharePoint or Dynamics.

With in-memory dynamic caching, all queries and stored procedures are analyzed and managed automatically: their logic and dependent objects (like tables, views, triggers, procedures, etc.) are identified and analyzed. All read-based queries and procedures leverage the in-memory cache. Write commands are monitored and cache invalidation is applied in real-time.

Because in-memory dynamic caching is the easiest solution to deploy currently, I’ll take a closer look at the leading vendor in this category later in this article. But let’s first take a hard look at in-memory OLTP from SQL Server 2014.

Introduction to SQL Server 2014 In-Memory OLTP

Microsoft’s newly released SQL Server 2014 features the In-Memory OLTP engine (some even define this as a revolution).

I want to examine SQL Server 2014 In-Memory OLTP from different angles: how to start using it, provide directions for migration planning, review closely many of its limitations, discuss SQL 2014 In-Memory OLTP applicability and see where the SQL Server In-Memory OLTP can be an alternative to in-memory dynamic caching, and where it is complimentary.

SQL Server 2014’s biggest feature is definitely its In-Memory transaction processing, or in-memory OLTP, which Microsoft claims make database operations much faster. In-memory database technology for SQL Server has long been in the works under the code name “Hekaton”.

Microsoft built its new In-Memory OLTP query processing engine from scratch, using a new lock-free and latch-free design. This is a key differentiator from alternatives such as pinning tables with DBCC PINTABLE or from putting databases on SSDs. While “DBCC PINTABLE” places a table in SQL Server’s buffer pool and the SSDs provide higher I/O, relational locks and latches still exist.

SQL Server 2014 In-Memory OLTP engine uses a new optimistic multi-version concurrency control mechanism. When a row in a shared buffer is modified, the In-Memory OLTP engine makes an entirely new version of that row and timestamps it. This process is very fast because it’s done entirely in memory. The engine then analyzes and validates any updated rows before committing them. This design is faster and more scalable than the traditional locking mechanism used by SQL Server’s relational database engine because there are no locks or other wait-states that prevent the processor from running at full speed.

In order to start using the SQL 2014 In-Memory OLTP, a database must have certain tables (actually, the file groups used to store tables) declared as memory-optimized. The resulting table can be used as a conventional database table or as a substitute for a temporary table.

Additional speed improvements are made from the gains realized by keeping a table in memory. Reads and writes to an in-memory table only lock on individual rows, and even then, row-level locks are handled in such a way that they don’t cause concurrency issues. Stored procedures can also run in memory for more of a boost by having them compiled to native code.

Excellent performance for DML write commands (Inserts, Update, Delete)

In classic disk tables there is a latch contention: concurrent transactions are attempting to write to the same data page; latches are used to ensure only one transaction at a time can write to a page.

The In-Memory OLTP engine is latch-free; there is no concept of pages. Thus, concurrent transactions do not block each other’s inserts, thus enabling SQL Server to fully utilize the CPU.

Microsoft released benchmarks show the following performance difference for INSERT intensive tables (run on a server with 2 CPUs, with 24 logical cores):

| Disk-based tables | Memory-optimized tables | Performance difference | |

| 10,000,000 order inserts using 100 threads, with 100,000 inserts each | 1:31:11 | 0:01:49 | 50X |

Read (SELECT) commands can gain significant benefit as well. The new engine allows creating “Native” pre-compiled Stored Procedures can access data of memory-optimized tables and perform data changes. With the use of In-memory tables there are significantly less (but not zero) locks, blocks, spinlocks and of course the I/O scans.

Quick start guide to SQL Server 2014 In-Memory

Step #1: Add MEMORY_OPTIMIZED_DATA filegroup to enable in-memory OLTP for your Database:

IF NOT EXISTS (SELECT * FROM sys.data_spaces WHERE TYPE='FX')

ALTER DATABASE CURRENT

ADD FILEGROUP [AdventureWorks2012_mod] CONTAINS MEMORY_OPTIMIZED_DATA

GO

IF NOT EXISTS (SELECT * FROM sys.data_spaces ds JOIN sys.database_files df

ON ds.data_space_id=df.data_space_id WHERE ds.TYPE='FX')

ALTER DATABASE CURRENT ADD FILE (name='AdventureWorks2012_mod',

filename='$(checkpoint_files_location)AdventureWorks2012_mod')

TO FILEGROUP [AdventureWorks2012_mod]

Step #2: For memory-optimized tables, automatically map all lower isolation levels (including READ COMMITTED) to SNAPSHOT:

ALTER DATABASE CURRENT SET MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT = ON

Step #3: Create your specific Table(s) to be Memory_Optimized:

To use In-Memory OLTP, you define a heavily accessed table as memory optimized. You have to define the primary key settings advanced settings when you create the table (can’t be altered later).

Notice the HASH, BUCKET_COUNT and MEMORY_OTIMIZED settings:

CREATE TABLE [Sales].[SalesOrderDetail_inmem]( [SalesOrderID] UNIQUEIDENTIFIER NOT NULL INDEXIX_SalesOrderID HASH WITH (BUCKET_COUNT=1000000), [SalesOrderDetailID] [int] NOT NULL, [OrderDate] [datetime2] NOT NULL, [OrderQty] [smallint] NOT NULL, [ProductID] [int] NOT NULL INDEXIX_ProductID HASH WITH (BUCKET_COUNT=10000000), /* ... ... */ INDEX IX_OrderDate (OrderDate ASC), CONSTRAINT [imPK_SalesOrderDetail_SalesOrderID_SalesOrderDetailID] PRIMARY KEY NONCLUSTERED HASH ( [SalesOrderID], [SalesOrderDetailID] ) WITH (BUCKET_COUNT=10000000) ) WITH (MEMORY_OPTIMIZED=ON, DURABILITY=SCHEMA_AND_DATA)

Step #3 – Update statistics for memory-optimized tables:

UPDATE STATISTICS Sales.SalesOrderDetail_inmem WITH FULLSCAN, NORECOMPUTE

Step #4 – CREATE stored procedures to be “NATIVE_COMPILATION”:

Stored procedures that only reference Memory_Optimized tables can be natively compiled into machine code for further performance improvements.

CREATE PROCEDURE Sales.usp_InsertSalesOrder_inmem @SalesOrderID UNIQUEIDENTIFIER OUTPUT, @DueDate datetime2 NOT NULL, @OnlineOrderFlag bit NOT NULL, @Comment NVARCHAR(128) = NULL, @SalesOrderDetails Sales.SalesOrderDetailType_inmem readonly WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english') /* Your procedure code Your procedure code Your procedure code */ END

Migrating to SQL Server 2014 In-Memory OLTP

Migration to In-Memory OLTP has to be performed in a development environment and carefully tested. Your High-Availability design, Databases design, Tables schemas and data, stored procedures, business logic in the database and even application code – all may require many syntax changes to use In-Memory OLTP.

This is not a “click and migrate” process. It requires development cycles, application and database design and code changes.

The right way to use the In-Memory OLTP engine is:

- Plan your production database architecture. The In-Memory OLTP is very different and has many limitations in terms of H/A, Mirroring, Replications available functionalities;

- Plan carefully your new database (and possibly application) design;

- Migrate several specific tables and procedures that are good benefit candidates;

- Develop or change your business-logic to fit the new design;

- Test and evaluate;

- Deploy

To evaluate whether the In-Memory OLTP can improve your database performance, you can use Microsoft new AMR tool (Analysis, Migrate and Report). For helping with actual migration you can use the Memory Optimization Advisor for tables and the Native Compilation Advisor to help porting a stored procedure to a natively compiled stored procedure.

The AMR tool helps identifying the tables and stored procedures that would benefit by moving them into memory and also help performing the actual migration of those database objects. The AMR tool is installed when you select the “Complete” option of “Management Tools”, and is later accessed through SQL Server Management Studio (SSMS) in Reports –>> Management Data Warehouseà Transaction performance reports tool:

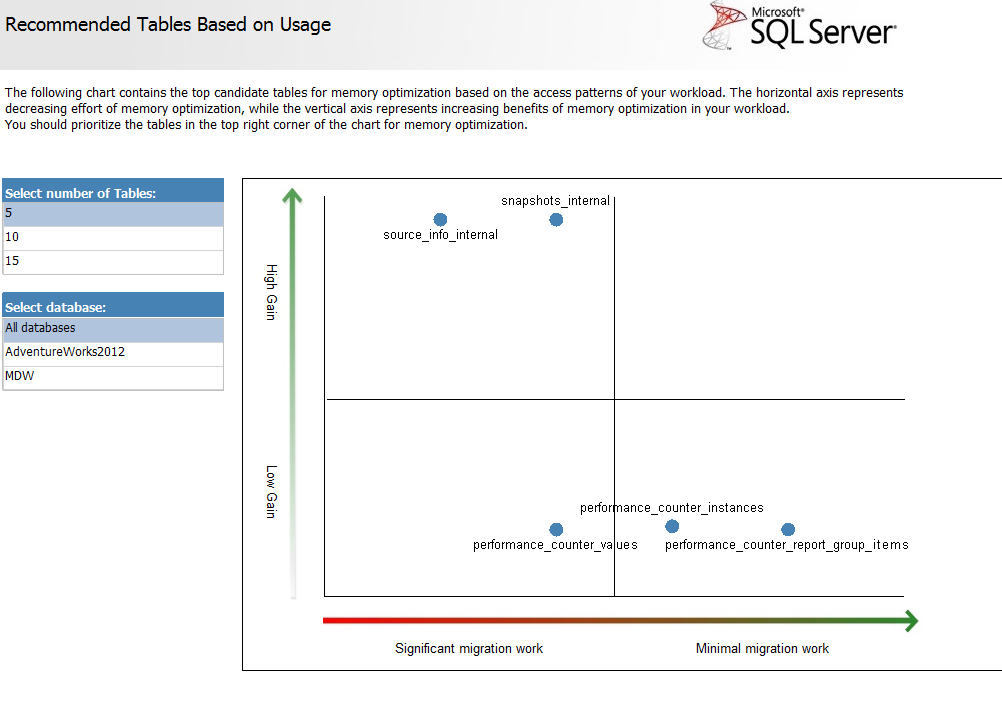

The AMR tool provides reports which tables and procedures can benefit the most from In-Memory OLTP and provide a hint how complex will be the conversion. The reports show either recommendations based on usage, contention and performance. Here is example (graphics may change in the GA release):

After you identify a table that you would like to port to use In-Memory OLTP, you can use the Memory-Optimization Advisor to help you migrate the disk-based database table to In-Memory OLTP. In SSMS Object Explorer, right click the table you want to convert, and select Memory-Optimization Advisor.

Limitations of SQL Server 2014 In-Memory OLTP

The In-Memory addition to SQL Server 2014 does not just expand the main features of the SQL Server, but rather it is a completely new engine. Just like any new software, the SQL Server In-Memory engine has limitations. Many things have to be planned and defined differently.

The below list is not full, but rather represents many major non-supported features for your consideration:

Server and Database limitations

- REPLICATION is not supported – Officially not supported, although in-memory tables can be defined as Subscribers (but not Publishers)

- MIRRORING is not supported

- DATABASE SNAPSHOT is not supported

- 250GB limit per server – Total data in all loaded in-memory tables cannot exceed 250GB

- MARS is not supported – Multiple Active Result Sets (MARS) is not supported with natively compiled stored procedures, so your application can’t use MARS connection to talk with the database

- Change Data Capture (CDC) is not supported

- DTC (distributed transactions) are not supported

- RTO (Recovery Time Objective) of your High Availability – the Starting and Recovery time is slower – For every database object (table, stored procedure) SQL Server has to compile and link the corresponding DLL file (that is loaded afterwards into the process space of sqlservr.exe), and this also takes some time. The compilation and linking is also performed when you are restarting your SQL Server, or when you perform a cluster failover.

SCHEMA, KEYS, INDEXes, TRIGGERS limitations:

- FIXED Schema – You have to design your in-memory tables with knowledge of your data size. Indexes, statistics, and blocks cannot be changed / applied later.

- ALTER TABLE for existing disk table is not supported – You can’t alter existing tables to become memory-optimized. You have to create new tables that are memory-optimized.

- ALTER TABLE for in-memory table is not supported – You cannot add another column to a memory-optimized table in your production.

- Row size limited to 8060 bytes

- FOREIGN KEY’s and CHECK CONSTRAINTS are not supported

- Datatypes:

- Datetimeoffset, Geography, Hierarchyid, Image, Ntext, Text, Sql_variant, Varchar(max), Xml, User data types (UDTs) – not supported

- CHAR and VARCHAR – Should be replaced to n(var)char

- Various not supported TABLE and COLUMN definitions: IDENTITY, ON (file group or partition), Data type [name], Computed Columns, FILESTREAM, SPARSE, ROWGUIDCOL, UNIQUE

- INDEX limitations: no COLUMNSTORE, CLUSTERED INDEX, no LOB datatypes

- DDL TRIGGERS and Event Notifications (Server and Database level) – Have to be removed before creating or dropping memory-optimized tables and/or natively compiled procedures

- LOGON TRIGGERS do not affect memory-optimized tables

- DML TRIGGERS cannot be defined on memory-optimized tables – You can explicitly use stored procedures to insert, update, or delete data to simulate the effect of DML triggers.

T-SQL non supported operators:

- Classic: OR, NOT, IN, LIKE, BETWEEN, CONTAINS, PERCENT, DISTINCT, NEXT VALUE FOR

- UNION’s are not supported

- MIN, MAX – limited to non strings

- LEFT / RIGHT / FULL OUTER JOIN – Outer joins return all rows from at least one of the tables or views mentioned in the FROM clause, as long as those rows meet any WHERE or HAVING search conditions – not supported (only Inner Joins are supported).

- FOR XML, FOR BROWSE

- Dynamic SQL (EXECUTE, EXEC) not supported

- CURSORs are not supported

- Sub-Queries are not supported

Transactions, Cross Database queries and

- BEGIN, COMIT, ROLLBACK are not supported – “Atomic Blocs” are an alternative

- Cross Database queries and transactions are limited – You cannot access another database from the same transaction or the same query that also accesses a memory-optimized table. You can create Table Variables, create two transactions: 1) insert the data from the remote table into the variable; 2) Insert the data into the local memory-optimized table from the variable.

- MERGE – A useful feature with performing insert, update, or delete operations on a target table based on the results of a join with a source table.

Functions, Views and Stored Procedures

- User-defined functions cannot be used in natively compiled stored procedures

- No In-Memory (“Native”) Functions

- VIEWs – Views cannot be accessed from natively compiled stored procedures.

- Disk-based tables cannot be accessed from natively compiled stored procedures.

T-SQL window functions are not supported at all. Examples:

- ROW_NUMBER()

- RANK()

- OVER (PARTITION BY …) or OVER (PARTITION BY …)

First conclusions about SQL Server 2014 In-Memory OLTP

Microsoft took a huge step with the SQL Server 2014 In-Memory OLTP, allowing developers to create high-speed and more scalable applications and databases. Embedding the new In-Memory OLTP engine inside SQL Server allows combining both classic disk-based tables and procedures together with the In-Memory tables and natively compiled stored procedures. This gives you amazing tools to create high speed enterprise and web-scale applications.

But the In-Memory OLTP has many limitations. It lacks support for many classically used T-SQL operators and functionalities, and many complications exist to migrate existing apps. The new In-Memory engine is not automatic to deploy, nor plug-and-play friendly. It’s designed to be carefully defined, developed and tested. Migration of existing applications to benefit In-Memory OLTP is possible but only in cases where you are able to develop and maintain the needed changes in the database and the application.

The SQL Server 2014 In-Memory OLTP is a great framework that is really focused on building new applications or new application-parts that will benefit from the In-Memory engine. For new apps it is also not a silver bullet solution to fit any problem, challenge or need, but rather a mission-specific tool.

In-Memory Dynamic Caching: An SQL Server 2014 In-Memory OLTP alternative or complimentary?

We have reviewed briefly the main in-memory data caching alternatives (data-grids, distributed caching, and dynamic caching). Of these, dynamic caching can be the easiest to deploy. Our examination of SQL Server 2014 In-Memory OLTP shows it to be a very interesting solution but one that has various limitations making it tough to use for existing production applications. So the obvious question is this: how do Dynamic Caching and the new SQL Server In-Memory OLTP engine compare? Are they complimentary? What’s best to use today?

So we can ground the conversation in reality, let’s compare the leader in dynamic caching for SQL Server, SafePeak, with SQL Server 2014 In-Memory OLTP.

SafePeak In-Memory Dynamic Caching

SafePeak is a query-level automated caching middleware for SQL Server based operational applications. SafePeak’s software solution combines three ideas:

- Result-based data caching for repetitive queries and stored procedures;

- In-memory databases that automatically keep transaction data integrity; and

- A solution that can fit immediately to production applications (including closed third party apps) with no code changes and minimal efforts for deployment.

SafePeak can accelerate the performance of applications and databases by In-Memory Caching of results from repetitive SQL queries and SQL stored procedures, while maintaining 100% ACID compliance and data integrity. SafePeak answers queries in microseconds (<0.001sec). SafePeak result-based dynamic caching prevents cached queries from hitting the database at all, thus eliminating the locks, blocks and spinlocks and drastically reducing usage and of I/O, CPU and Memory.

The SafePeak solution is software only. It doesn’t require any specialized hardware. And it doesn’t require any changes to apps or databases to deploy (unlike data grids). It’s based on “smart auto-learning”, where all queries and stored procedures are analyzed automatically. It identifies and analyzes logic and dependent objects (like tables, views, triggers, procedures, etc.) for you. And as applications evolve, so too does cached data.

Critically, it has advanced data integrity and eviction processes. SafePeak will determine if an incoming query is an update, insert, alter or any other request that may cause a change in the database. In this case, SafePeak dissects the request and decides which tables in the database may be affected by its execution. It then looks at the query results stored in the Cache Manager and evicts all results that have any connection to the affected database tables.

Besides the auto-pilot mechanism, SafePeak’s management dashboard allows you to tune and refine caching effectiveness.

For example, with a good understanding of the application, a user can configure caching rules for certain SQL queries, stored procedures or even tables and views to have reduced cache invalidation sensitivity to table updates, in order to keep the data in cache. Consider a use-case like “Get details of order #123” command, where the result does not change with entrance of new orders.

Another example is a “cache warm-up” mechanism, in which you create a list of queries that are scheduled to enter SafePeak’s Cache.

SafePeak and SQL Server 2014 In-Memory OLTP

Now that we’ve looked at SafePeak’s software for dynamic caching, the following chart lets us quickly compare it to SQL Server 2014 In-Memory OLTP.

| Attribute | SQL Server 2014In-Memory OLTP | SafePeakIn-Memory Dynamic Caching |

| Data In-Memory | All table data | Hot Data only |

| Type of data in memory | Raw tables data | Result-sets(of Queries and Procedures) |

| RAM requirements | High ** All table data is loaded to memory | Medium * * Only Hot and Computed data is in-memory |

| Middleware | No | Yes |

| Response time from memory | Micro to milliseconds | Microseconds |

| Fast speed for “first query call” | Yes | No * * Possible via cache warm-up |

| Read:Write ratio affects the Read efficiency performance | No | Yes |

| READ queries acceleration | High | High |

| WRITE queries acceleration | High | Low |

| No Locks on tables | Yes | No* Locks are reduced but only due to less load on the server |

| SQL Server Support | 2014 only | 2005, 2008, 2012(2014 to be supported during 2014) |

| Full T-SQL and Schema support | No, has many limitations | Yes |

| Replication, Mirroring support | No | Yes |

| Database / Application Migration requirements | Complex | None |

| Time to migrate and deploy for existing production application | Long | Short |

| Fits 3rd party applications (SharePoint, Dynamics…) | No | Yes |

The initial comparison shows that SafePeak In-Memory Dynamic Caching is a better fit for general cases of production and custom applications (including OLTP and Reporting applications), especially where there are significantly more reads than writes. The SafePeak technology also accelerates 3rd-party applications like Microsoft SharePoint, Microsoft Dynamics, or BMC Remedy for example.

SafePeak focuses on caching Reads, but has also a limited in-direct acceleration effect on Writes.

As a by-product, SafePeak also can improve the scalability of SLQ Server databases, thanks to two situations:

- Reads no longer tax database I/O, CPU or memory resources – which is especially helpful to databases that are serving “chatty” applications;

- Databases have freed-up resources now available to serve more users and more transaction workloads.

The SQL In-Memory OLTP engine is focused on loading specific tables into memory, creating specific queries and procedures calls for them and getting great performance on those tables. However many changes may be required to the database and the application. Performance and scalability improvements for very specific tasks, like Writes or Reads that are always-in-memory, can be much higher than SafePeak, but these improvements come with time and development costs. Also, SQL Server In-Memory OLTP engine does not answer all complex load and performance challenges.

The best answer probably is that both SafePeak Caching and the Microsoft In-Memory OLTP can be either chosen for different tasks or complement each other:

- Where SafePeak “virtualizes” and accelerates the hot-data from all most-accessed tables, views and procedures, and

- Where the SQL Server In-Memory OLTP boosts performance of a few very intensive write-and-read tables.

This has been a long article, but I hope it helps you better understand how In-Memory Data Cache solutions can address your SQL Server database performance and scalability.

Reference: Pinal Dave (https://blog.sqlauthority.com)

10 Comments. Leave new

It is an exact copy of script given by Microsoft. Why bother?

Is the 250GB limit for the aggregate of all “in memory” tables or only the durable ones? The documentation that Microsoft provided specifically mentions durable tables. If you have “SCHEMA_ONLY” tables does this limitation still apply? Microsoft’s guidance mentions the 250GB limitation is likely to mean that the in memory footprint could be 500GB. So, does this apply to “SCHEMA_ONLY” tables…could you fit more data using non-durable in memory tables. Thanks in advance for your response.

Hi Stew. I have been searching for answer to this question. Were you able to find an answer?

Hi Pinal,

I have problem with slow running query

Query is running in 4 sec in Dev server but in the Production server it is taking 17 second,Could you please help me on this

Hi Pinal,

Is it still true that you can’t use Left / Right / Full outer join ? I just try on a test server and it seems to work … dunno if I did wrong :/

Hi Pinal,

I can test the performance benefits using insert operation from natively compiled sps.

Could you please provide me the example of update operation how it works.

If compared to SAP HANA in memory why should SQL server 2014 in memory is better? can u tell me some reasons or limitations?

IF these operator are not supported how i achive the pagging.

Classic: OR, NOT, IN, LIKE, BETWEEN, CONTAINS, PERCENT, DISTINCT, NEXT VALUE FOR

Which is the best database data capture tools for sql server 2014 Web edition ?

Dear Pinal Dave , your site is very informative and i found every thing what i search for about sql server , thanks for your hard work , and keeping upto date this webiste..

thanks

Mumtaz Ali