I know from personal experience that many novice SQL Server users have trouble importing and exporting data in various formats. In my practice, such tasks arise very often. For recurring tasks, if the file structure is not going to change, I prefer to automate the process: I can create an SSIS package, deploy it on a server, and then schedule it through SQL Server Agent. Let us look at an easy way to import and export SQL Server data.

In practice, clients work with numerous data formats (for example, Excel and XML). In some cases, the Import and Export Wizard included in Microsoft SQL Server Management Studio helps greatly. However, I prefer dbForge Data Pump for SQL Server, a new SSMS add-in from Devart. The tool allows me to quickly and easily import and export data in a variety of formats.

Recently, I needed to upload data from a large XML file with quite simple structure:

```xml
<users>
  <user Email="joe.smith@google.com" FullName="Joe Smith" Title="QA" Company="Area51" City="London" />
</users>
```

Let’s compare three approaches to solving the problem: SSIS, XQuery, and Data Pump. We cannot use the SSMS Import and Export Wizard because it does not work with XML files.

- SSIS

In Integration Services, create a new Data Flow Task:

On the Data Flow tab, add an XML Source. Then specify the XML file to load data from and generate the XSD schema:

Since the XML source returns all values as Unicode strings, I need to convert them to ANSI strings before inserting them:
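To see why this conversion step deserves attention, here is a small Python sketch. Python is used only for illustration, the `to_ansi` helper is hypothetical, and code page 1252 (the usual Western-European Windows "ANSI" code page) is an assumption. Plain ASCII text survives the conversion unchanged, but characters outside the code page cannot be represented. Python's `errors="replace"` turns them into `?`; SSIS or SQL Server may instead fail the conversion or substitute a different character, depending on their settings.

```python
def to_ansi(value: str, codepage: str = "cp1252") -> bytes:
    """Encode a Unicode string into a single-byte ANSI code page.

    Assumption: cp1252 is the target code page. Characters it cannot
    represent are replaced with b'?', which shows the kind of silent
    data loss a Unicode-to-ANSI conversion can cause.
    """
    return value.encode(codepage, errors="replace")


if __name__ == "__main__":
    print(to_ansi("London"))  # b'London'
    print(to_ansi("Łódź"))    # b'?\xf3d?' ('ó' exists in cp1252, 'Ł' and 'ź' do not)
```

If the source data may contain such characters, keeping the destination columns as `NVARCHAR` avoids the conversion entirely.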

Then we need to specify where to insert data:

We need to specify the table to insert data into. In this example, I created a new table.

Then just run the package and check the Execution Results tab for errors:

Creating the SSIS package took 10 minutes; the package itself ran for 1 minute.

- XQuery

Often, writing a query is much faster than creating an SSIS package:

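```sql
-- Drop the target table if it already exists
IF OBJECT_ID('dbo.mail', 'U') IS NOT NULL
    DROP TABLE dbo.mail;
GO

-- Load the whole file into an XML variable
-- (SINGLE_BLOB returns the file as a single varbinary(max) value in BulkColumn)
DECLARE @xml XML;

SELECT @xml = BulkColumn
FROM OPENROWSET(BULK 'D:\users.xml', SINGLE_BLOB) AS x;

-- Shred each <user> element into a row; its attributes become VARCHAR columns
SELECT
      Email    = t.c.value('@Email',    'VARCHAR(255)')
    , FullName = t.c.value('@FullName', 'VARCHAR(255)')
    , Title    = t.c.value('@Title',    'VARCHAR(255)')
    , Company  = t.c.value('@Company',  'VARCHAR(255)')
    , City     = t.c.value('@City',     'VARCHAR(255)')
INTO dbo.mail
FROM @xml.nodes('/users/user') AS t(c);
```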

It took me 3 minutes to write the query. However, it took more than 9 minutes to execute, because parsing XML on the server side is quite expensive.
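One common alternative to loading the whole document into an `xml` variable is to stream-parse the file on the client and send only the extracted rows to the server. The Python sketch below illustrates that idea with the standard library's `xml.etree.ElementTree.iterparse`. The `stream_users` name and the fixed `COLUMNS` list are assumptions for this example; I am not claiming this is how SSIS or Data Pump work internally, and I have not timed it against the query above.

```python
import io
import xml.etree.ElementTree as ET
from typing import BinaryIO, Iterator, Optional, Tuple, Union

# Assumption: the attribute names from the sample file, in target column order.
COLUMNS = ("Email", "FullName", "Title", "Company", "City")

Row = Tuple[Optional[str], ...]


def stream_users(source: Union[str, BinaryIO]) -> Iterator[Row]:
    """Yield one tuple per <user> element without keeping the whole tree in memory."""
    context = ET.iterparse(source, events=("start", "end"))
    _event, root = next(context)  # the first event is the start of the <users> root
    for event, elem in context:
        if event == "end" and elem.tag == "user":
            # Missing attributes become None (NULL in the target table).
            yield tuple(elem.get(col) for col in COLUMNS)
            # Drop already-processed children so memory use stays bounded.
            root.clear()


if __name__ == "__main__":
    demo = io.BytesIO(
        b'<users><user Email="joe.smith@google.com" FullName="Joe Smith" '
        b'Title="QA" Company="Area51" City="London" /></users>'
    )
    for row in stream_users(demo):
        print(row)
```

`iterparse` accepts either a file path or a binary file object, so in practice you would pass the path of the large XML file instead of the in-memory demo.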

- dbForge Data Pump for SQL Server

Now’s the time to try Devart’s Data Pump.

In the Database Explorer shortcut menu, select Import Data.

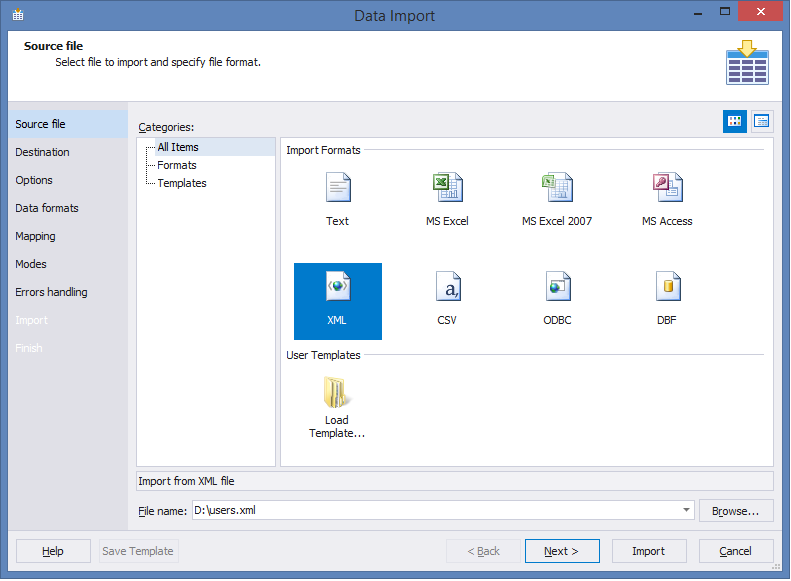

Then we need to specify the file type and the file path.

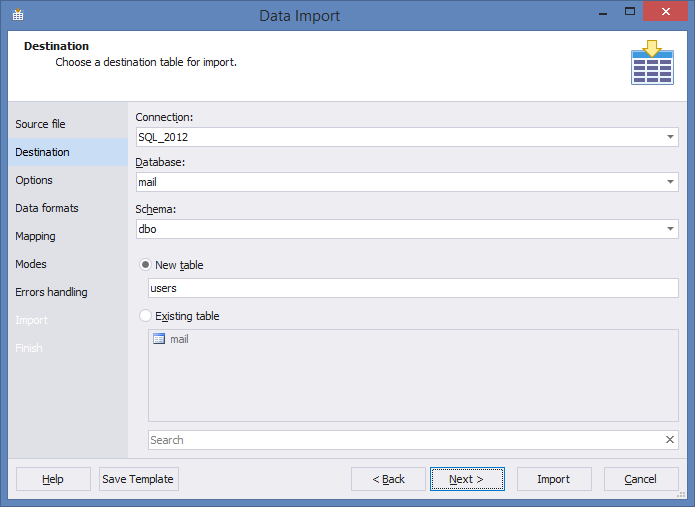

Then we can select the table to insert data into. The table can also be created automatically.

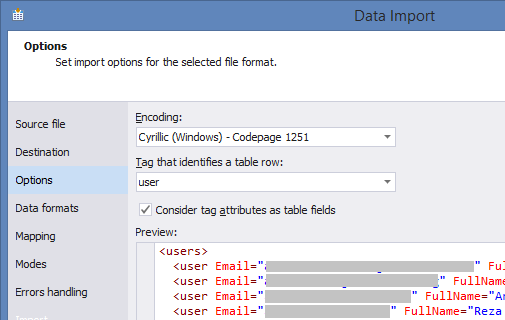
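As a rough illustration of what creating the table automatically involves, the hypothetical Python helper below builds a `CREATE TABLE` statement from a list of attribute names, using the same `VARCHAR(255)` type as the XQuery example. How Data Pump actually chooses column names and types is not covered here; this only sketches the idea under those assumptions.

```python
from typing import Sequence


def quote_name(name: str) -> str:
    """Bracket-quote an identifier like T-SQL's QUOTENAME with default brackets (']' is doubled)."""
    return "[" + name.replace("]", "]]") + "]"


def create_table_sql(table: str, columns: Sequence[str], width: int = 255) -> str:
    """Build a CREATE TABLE statement with one nullable VARCHAR column per attribute."""
    column_lines = ",\n".join(
        f"    {quote_name(name)} VARCHAR({width}) NULL" for name in columns
    )
    return f"CREATE TABLE {table} (\n{column_lines}\n);"


if __name__ == "__main__":
    print(create_table_sql("dbo.mail", ["Email", "FullName", "Title", "Company", "City"]))
```

The table name is inserted as-is, so it must come from a trusted source.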

Select the XML tag for parsing:

Now we can select which columns (attributes of the selected tag) we need.
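Selecting a tag and then a subset of its attributes amounts to a simple projection. A minimal Python sketch, assuming the whole file fits in memory (the `extract` helper is hypothetical):

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional, Sequence


def extract(xml_text: str, tag: str, columns: Sequence[str]) -> List[Dict[str, Optional[str]]]:
    """Return one dict per <tag> element, keeping only the chosen attributes."""
    root = ET.fromstring(xml_text)
    # root.iter(tag) walks the whole tree (root included), so nesting depth does not matter.
    # Attributes that are absent on an element come back as None.
    return [{col: elem.get(col) for col in columns} for elem in root.iter(tag)]


if __name__ == "__main__":
    sample = ('<users><user Email="joe.smith@google.com" FullName="Joe Smith" '
              'Title="QA" Company="Area51" City="London" /></users>')
    print(extract(sample, "user", ["Email", "City"]))
    # [{'Email': 'joe.smith@google.com', 'City': 'London'}]
```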

Next, check the Use bulk insert option to reduce the load on the transaction log when inserting data.
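The general idea behind bulk loading is to send rows in large batches instead of issuing one `INSERT` per row. The Python sketch below shows batching with the standard `sqlite3` module as a stand-in database; it does not reproduce SQL Server's bulk-load API, and whether SQL Server minimally logs a bulk load depends on further conditions such as the database recovery model. The `batched` and `load_in_batches` names are hypothetical, and the table and column names are interpolated into the SQL text, so they must come from a trusted source.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator, List, Sequence, Tuple


def batched(rows: Iterable[Tuple], size: int) -> Iterator[List[Tuple]]:
    """Split an iterable of rows into lists of at most `size` rows."""
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch


def load_in_batches(conn: sqlite3.Connection, table: str, columns: Sequence[str],
                    rows: Iterable[Tuple], batch_size: int = 1000) -> int:
    """Insert rows with one executemany() and one commit per batch; return the row count."""
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    total = 0
    for batch in batched(rows, batch_size):
        with conn:  # commits the batch on success, rolls it back on error
            conn.executemany(sql, batch)
        total += len(batch)
    return total


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE mail (Email TEXT, City TEXT)")
    rows = ((f"user{i}@example.com", "London") for i in range(2500))
    print(load_in_batches(conn, "mail", ["Email", "City"], rows))  # 2500
```

Because `rows` can be a generator, this combines naturally with a streaming parser: rows are read, batched, and inserted without ever holding the whole file in memory.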

Click Import, and that’s it! The whole process took 2 minutes. This approach does not require any special skills. The tool lets me quickly and easily import data in whatever formats are convenient for my clients.

Reference: Pinal Dave (https://blog.sqlauthority.com)

7 Comments

Hi,

Is it possible to use Data Pump to automate imports/exports?

Thank you,

Roberto

No, not if you mean running it from SQL Server Agent. For now, Data Pump can save templates, which can later be reused for import/export. By the way, command-line support will be added in the next release.

Hi. Is Data Pump vastly better than the standard offering in SSMS? Also, this raises the question of when to let users access SSMS to do such work themselves, i.e., should all power users outside IT departments have access to SSMS, with suitable training?

I would appreciate your thoughts.

Regards

Ian

Hi Pinal,

We have a scenario where we want to upgrade our core product to a higher version. We are using SQL Server 2012, and the upgrade includes some schema changes, data migration, and data conversion.

What would be the best option for performing the database upgrade with minimal downtime?

Following are the options we discussed:

1. Do the upgrade with a planned downtime of 12 to 18 hours (because of the large data volume).

2. Create a backup of the existing DB, restore it on another server, perform the upgrade there without affecting production, and swap the servers after the upgrade.

The 2nd option is ideal, but the problem is how to capture the delta updates that happen on production from the time of the backup until the restore completes on the new DB server (around 20 hours of data). If we had the delta changes, we could easily apply them to the new DB and swap the production DB with the new one.

Our main aim is to reduce the downtime.

The DB upgrade will take 12 to 18 hours, based on the upgrade we did in a test environment with the same amount of data.

Please share your ideas.

Thanks in advance.

It surprises me that the SSIS package (which adds one more layer to the process) is faster than SQL Server's native XML implementation.

Were both executions run on the same machine (server)?

Pinal, I just wanted to thank you for all your blog posts. You’ve elevated my career throughout the years, and although, embarrassingly, I’ve never commented on any of your posts, you’ve been a savior and a great teacher. I almost always find your posts very easy to understand and so well presented. Btw, great display picture too!

Best regards

Vijay