I was recently working on a performance tuning project in Dubai (yeah I was able to see the tallest tower from the window of my work place). I had a very interesting learning experience there. There was a situation where we wanted to receive the schema of original database from a certain client. However, the client was not able to provide us any data due to privacy issues. The schema was very important because without having an access to underlying data, it was a bit difficult to judge the queries etc. For example, without any primary data, all the queries are running in 0 (zero) milliseconds and all were using nested loop as there were no data to be returned. Even though we had CPU offending queries, they were not doing anything without the data in the tables. This was really a challenge as I did not have access to production server data and I could not recreate the scenarios as production without data.

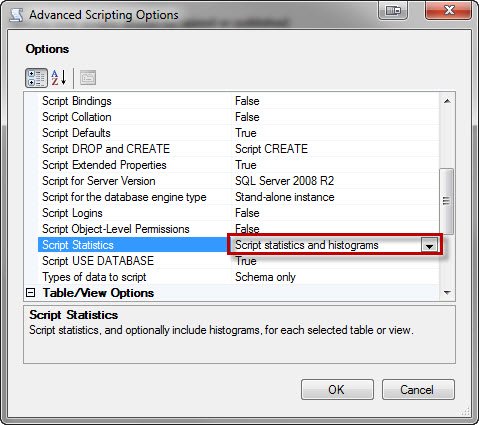

Well, I was confused but Ruben from Solid Quality Mentors, Spain taught me new tricks. He suggested that when table schema is generated, we can create the statistics consequently. Here is how we had done that:

Once statistics is created along with the schema, without data in the table, all the queries will work as how they will work on production server. This way, without access to the data, we were able to recreate the same scenario as production server on development server.

When observed at the script, you will find that the statistics were also generated along with the query.

You will find statistics included in WITH STATS_STREAM clause.

What a very simple and effective script.

Reference: Pinal Dave (https://blog.sqlauthority.com)

18 Comments. Leave new

Pretty Neat!

Thx, i remember requesting that one a year ago :D

nice trick !

Really Superb trick

nice!

Indeed, very simple, yet excellent trick! I am going to try that tomorrow itself to see how this works with our development databases. This has tremendous potential in performance tuning when you don’t have enough space to get all the data in-house or have security considerations (as in your case).

Simply wonderful! Thank-you!

This is really a neat script, just by getting statistics how once can simulate production environments..

Thank you

Ramdas

Wow, this is a nice way. Thanks Pinal!

This is very help full

very help full man

This is neat. I wonder though – since SQL Server auto updates statistics when they are more than 20 percent out of date – the first query run would use the fake stats while the engine updates the stats for the table on a separate thread. The second query run against the table would then use updated stats which would nullify the intentions of the fake stats.

Thanks Pinal.May be next time in during production issue I will recommend this. Till now I was recommending on stimulating the statistics in dev or test environment by deceiving the optimizer.SQL Server 2005/2008 gives that functionality.

that really good trick. Very cool. Thanks Pinal!

Great topic yet again!

In SQL PSS every perf engg sure would have crossed this.

An additional thing i used to do is create a test DB from the script and change DB state to READ_ONLY, just to be sure that stats don’t get modified during t/s.

Remember that your production environment will (for the most part) have different hardware than your Dev/Test environments. Therefore the Query Plans can differ slightly (seldom significantly) from what you see replicated in DEV to what SQL Engine will do in PROD.

Thank you Pinal Dave.

Dave, would you recommend or be against. Using this after migrating a SQL 2005 database to 2012. I am worried of the slowness my users will encounter when they start using the new database. If I can move over the stats, it would be much faster for them. I was looking for a post that would state, move statistics from one server to another, during a migration upgrade.